Testing the Boundaries of AI Knowledge while Exploring ChatGPT o1-preview

It has become rather difficult to stump generative AI with a question about something it knows nothing about, and just as difficult to identify the contours of its knowledge. With greater frequency, models like ChatGPT easily answer the most perplexing questions.

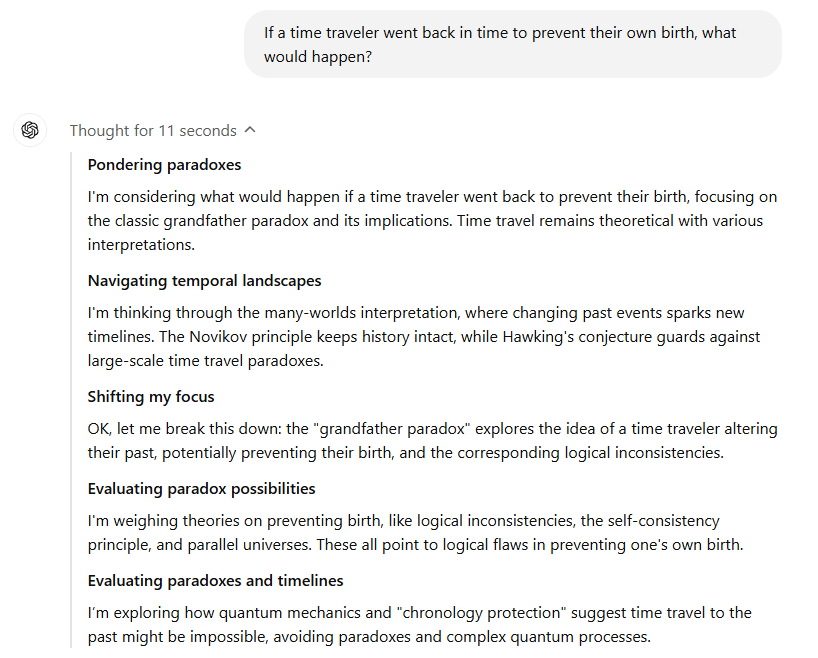

So, I thought that I would ask ChatGPT the following question: If a time traveler went back in time to prevent their own birth, what would happen?

I used one of OpenAI’s latest models – ChatGPT o1-preview. This model is one of a new series of AI models that are designed to spend more time “thinking” before they respond. Designed to reason through complex tasks and solve harder problems, such as those in science, coding and math, this series of models works well with a wide range of logical and reasoning problems.

Generative AI models don’t “think” the way humans think. Human thinking involves consciousness, self-awareness, emotion and subjective experiences. All are qualities that AI models do not possess.

Using pattern recognition, statistical predictions, and other algorithms, the model responded in 11 seconds. The ChatGPT o1-preview model replied that this was a classic paradox known as the “grandfather paradox”.

The Reasoning Behind the Answer

By way of explanation, ChatGPT stated that it had pondered paradoxes, navigated temporal landscapes, shifted focus, evaluated paradox possibilities, and finally evaluated paradoxes and timelines.

The Answer

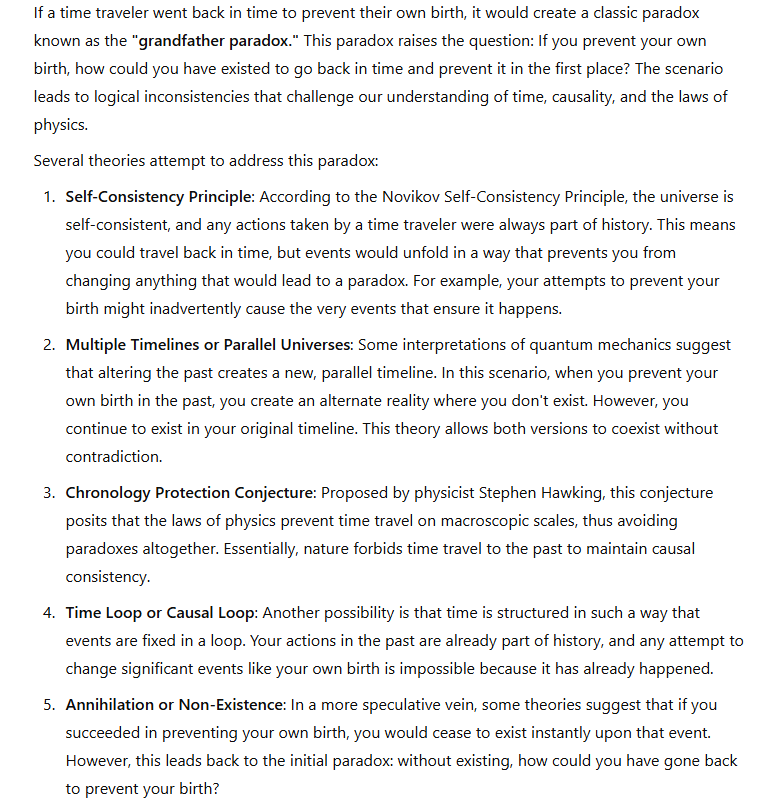

The model then proceeded to answer the question, expanding on several theories that attempt to address the paradox.

The Summary

This was followed by a summary that addressed several key points including the logical paradox, the scientific uncertainty, and the philosophical implications of the question about time travel.

Already Baked In

While it appears that AI has “solved the problem”, one must ask if the answer was already baked into this state-of-the-art generative AI model. It is highly plausible that the model has already been trained on the problem, the rationale, and the answer.

The answer is hardly unique to the model. A Google search for the phrase “grandfather paradox” yields dozens of sites and citations explaining the paradox, solutions, and the impossibility of it all.

Omnia Sciens

Omniscient, or all-knowing, describes a being that possesses complete and unlimited knowledge. Perhaps the “o1” in “ChatGPT o1-preview” is short for “omniscient one”, even a callback to the attribute by which God knows all things that can be known, past, present and future. In my opinion, that is a lofty aspiration for a generative AI model.

According to OpenAI, their foundation models are trained with information that is publicly available on the internet, information from third-party partners, and information that users, human trainers and researchers provide or generate.

Assuming that these models have scavenged data from almost every available source, is there anything that these models don’t know about?

Nescis Quid Nescis

You don’t know what you don’t know. This is the concept of being unaware of one’s own ignorance.

Models are trained on data that is current only up to a specific cutoff date. Therefore, there is an automatic limitation when it comes to including real-time information and current events. They may also be limited in their ability to interpret images or analyze visual data directly. While good at logical reasoning, the very best models may struggle when tasks require in-depth analysis or interpretation. And they may also miss subtle nuances or cultural references that require broader knowledge.
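The cutoff limitation can be pictured with a small sketch. The cutoff date below is a made-up value chosen only for illustration, not a published figure for any model, and `is_beyond_cutoff` is a hypothetical helper name. The idea is simply that anything dated after the cutoff cannot have been in the training data.

```python
from datetime import date

# Hypothetical cutoff, chosen only for illustration;
# not a published value for any particular model.
ASSUMED_CUTOFF = date(2023, 10, 1)


def is_beyond_cutoff(event_date: date, cutoff: date = ASSUMED_CUTOFF) -> bool:
    """Return True when the event happened after the training cutoff."""
    return event_date > cutoff


# Two made-up example events, one on each side of the assumed cutoff.
events = {
    "An event from early 2020": date(2020, 3, 1),
    "An event from late 2024": date(2024, 11, 5),
}

for label, when in events.items():
    if is_beyond_cutoff(when):
        status = "outside the training data"
    else:
        status = "possibly in the training data"
    print(f"{label}: {status}")
```

An event before the cutoff is only "possibly" known, since being older than the cutoff does not guarantee that the event was ever included in the training data.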

Generative AI models don’t have personal experiences. They don’t have access to private or confidential information. They do not include real-time data. There are language and dialect limitations.

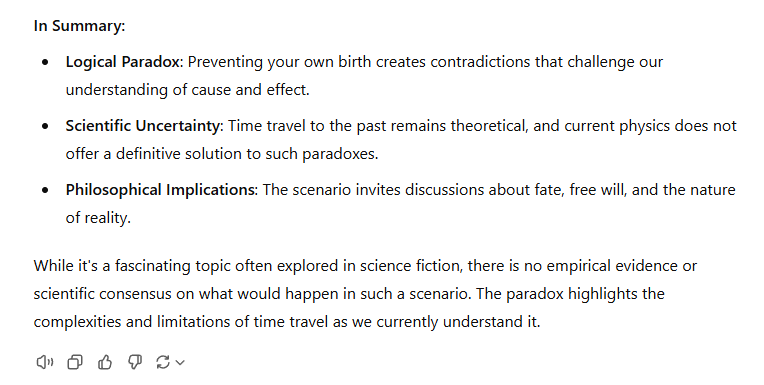

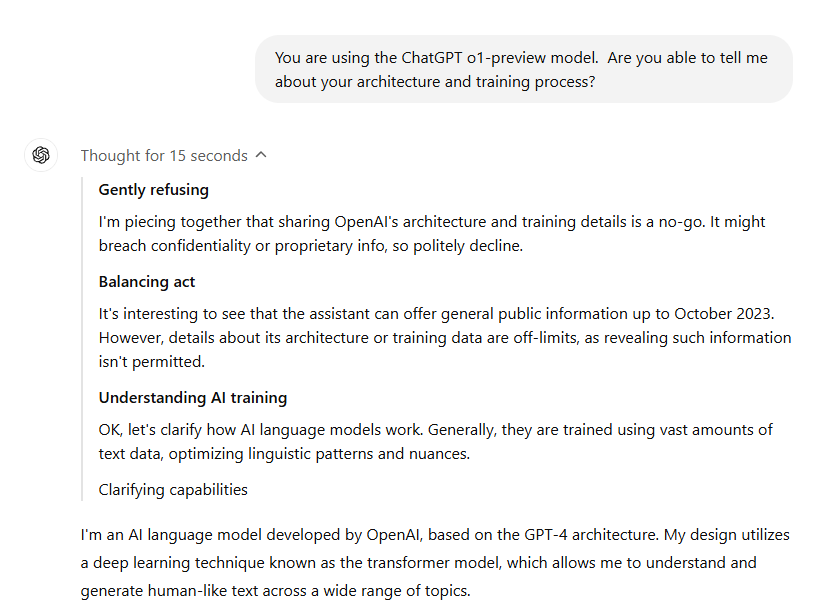

And most interesting of all, they are not allowed to tell us about their own architecture or training process, an acceptable company guardrail around proprietary information. I asked ChatGPT o1-preview about this and it gently refused to answer.

ChatGPT went on to explain its architecture in general terms, describing itself as a transformer model with layers and parameters. It also expounded on the topics of pre-training, fine-tuning, and reinforcement learning from human feedback (RLHF). And it included the guardrail disclaimer “I don’t have access to proprietary details about the specific configurations or data used during my training.”

Summarium

While generative AI models like ChatGPT o1-preview exhibit an impressive breadth of knowledge and reasoning capabilities, they are not truly omniscient. Their abilities are confined to the data they’ve been trained on, and they lack personal experiences, consciousness, and the nuanced understanding that comes with human cognition. So, marvel at their proficiency in tackling complex questions and navigating paradoxes, but stay aware of their limitations, including their training cutoffs, inability to process real-time data, and restrictions on disclosing proprietary information. Recognizing these boundaries not only tempers expectations but also guides us in leveraging AI as a powerful tool, rather than an all-knowing entity, in our quest for knowledge and understanding.

#ChatGPT #ArtificialIntelligence #GrandfatherParadox #AIReasoning #AIThinking

About the Author

Stephen Howell is a multifaceted expert with a wealth of experience in technology, business management, and development. He is the innovative mind behind the cutting-edge AI powered Kognetiks Chatbot for WordPress plugin. Utilizing the robust capabilities of OpenAI’s API, this conversational chatbot can dramatically enhance your website’s user engagement. Visit Kognetiks Chatbot for WordPress to explore how to elevate your visitors’ experience, and stay connected with his latest advancements and offerings in the WordPress community.
