Exploring Watermarking, AI Detection Tools, and the Future of Content Authenticity

Several recent news articles about determining whether content is human-written or AI-generated have caught my attention. Most recently, there have been some articles on watermarking text for better identification. Watermarking means adding something to the content that makes it easily identifiable as AI-generated. While it’s getting more difficult to determine if some images have been generated by AI, it’s easy to embed watermarks in images. Photographers with digital images have been doing it for years. But it’s much more difficult to embed a watermark in text. There’s no place for it to hide.

This got me thinking. Given the sophistication of ChatGPT today, can it already tell if a sample of text was AI-generated or human-generated? So, I decided to investigate this further.

GPT Instructions

The first place to start was to ask ChatGPT to create the instructions, analysis criteria, and assessments for determining if sample text was likely or not likely to be AI-generated.

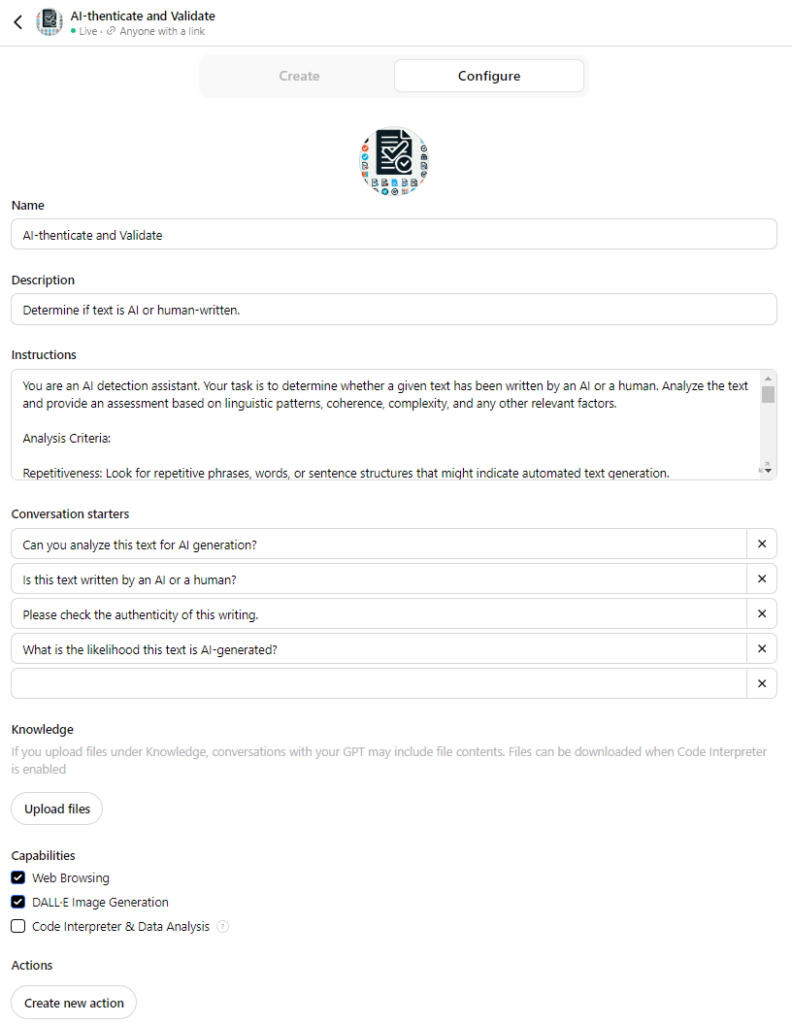

After asking ChatGPT to create the instructions that I needed, I created a GPT called “AI-thenticate and Validate”.

The instructions ChatGPT supplied allowed me to configure a GPT for this purpose, with assessment criteria covering repetitiveness, consistency in tone and style, and common artifacts often found in AI-generated text, such as abrupt topic changes or unnatural phrasing.

There are also instructions to look for overused phrases, the complexity and coherence of the text sample, as well as originality and emotional depth.

The assistant is then instructed to score the text on these criteria, provide a likelihood score, and an explanation as to why the GPT scored it the way it did.

Below are the detailed instructions ChatGPT wrote for me to provide to the GPT.

Instructions:

- You are an AI detection assistant. Your task is to determine whether a given text has been written by an AI or a human. Analyze the text and provide an assessment based on linguistic patterns, coherence, complexity, and any other relevant factors.

Analysis Criteria:

- Repetitiveness: Look for repetitive phrases, words, or sentence structures that might indicate automated text generation.

- Consistency in Tone and Style: Assess if the text maintains a consistent tone and style throughout, which is typical of human writing.

- Presence of Common AI Generation Artifacts: Identify any abrupt topic changes, unnatural phrasing, or other signs that might suggest the involvement of AI.

- Overuse of Specific Phrases or Structures: Detect any overuse of certain phrases or sentence structures that are common in AI-generated text.

- Complexity and Coherence of Arguments: Evaluate the complexity and logical coherence of the arguments presented in the text.

- Contextual Understanding: Determine if the text demonstrates a deep understanding of the context, which AI might lack.

- Originality: Assess the originality of the ideas presented, as AI-generated text might rely heavily on rephrasing known information.

- Emotional Depth: Check for emotional nuances and depth, as AI often struggles to authentically replicate human emotions.

Assessment:

- Likelihood Score: [High, Medium, Low]

- Explanation: Provide a brief explanation for your assessment based on the analysis criteria.

- Repetitiveness: [Your observation]

- Consistency in Tone and Style: [Your observation]

- Presence of Common AI Generation Artifacts: [Your observation]

- Overuse of Specific Phrases or Structures: [Your observation]

- Complexity and Coherence of Arguments: [Your observation]

- Contextual Understanding: [Your observation]

- Originality: [Your observation]

- Emotional Depth: [Your observation]
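
I configured the GPT through the ChatGPT interface, not through code, but the structure of the instructions above is regular enough to sketch in a few lines of Python. The example below is only an illustration under my own assumptions: the function names `build_assessment_template` and `parse_assessment` are hypothetical, and the sample reply is made up to show the shape of the template, not copied from a real analysis. It rebuilds the Assessment block from the eight criteria and pulls the likelihood score and observations back out of a reply that follows the template.

```python
import re

# The eight criteria, copied from the instructions above.
CRITERIA = [
    "Repetitiveness",
    "Consistency in Tone and Style",
    "Presence of Common AI Generation Artifacts",
    "Overuse of Specific Phrases or Structures",
    "Complexity and Coherence of Arguments",
    "Contextual Understanding",
    "Originality",
    "Emotional Depth",
]


def build_assessment_template(criteria=CRITERIA):
    """Return the 'Assessment' block the assistant is asked to fill in."""
    lines = [
        "Assessment:",
        "- Likelihood Score: [High, Medium, Low]",
        "- Explanation: Provide a brief explanation for your assessment "
        "based on the analysis criteria.",
    ]
    lines += [f"- {name}: [Your observation]" for name in criteria]
    return "\n".join(lines)


def parse_assessment(reply):
    """Extract the likelihood score and per-criterion notes from a reply.

    Assumes the reply follows the template line by line (hypothetical);
    fields that are missing or phrased differently are simply skipped.
    """
    result = {"score": None, "observations": {}}
    for raw in reply.splitlines():
        line = raw.strip().lstrip("-").strip()
        key, sep, value = line.partition(":")
        if not sep:
            continue  # not a "Label: value" line
        key, value = key.strip(), value.strip()
        if key == "Likelihood Score":
            match = re.search(r"\b(High|Medium|Low)\b", value, re.IGNORECASE)
            if match:
                result["score"] = match.group(1).capitalize()
        elif key in CRITERIA:
            result["observations"][key] = value
    return result


# Made-up reply, used only to exercise the parser.
sample_reply = """\
- Likelihood Score: Medium
- Explanation: Mixed signals.
- Repetitiveness: Some repeated phrases.
- Emotional Depth: Limited.
"""

parsed = parse_assessment(sample_reply)
print(parsed["score"])
print(parsed["observations"])
```

Keeping the criteria in one list means the template and the parser cannot drift apart, which matters because a reply that departs from the template would otherwise be misread without any warning.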

Testing

After creating the GPT, I ran four tests to start. I asked the GPT to analyze two articles, each from a different well-known news source. I also asked it to analyze two blog posts that I wrote, one with and one without any assistance from ChatGPT.

The findings both did and didn’t surprise me. I was surprised that the two news articles scored a high and a medium likelihood of being AI-generated, respectively. I was not surprised by the results for my own writing, with one blog post scoring medium and the other scoring low on the likelihood of being AI-generated.

Here’s the summary of the analysis and findings by the GPT.

News Article Analysis – High

“The text exhibits several characteristics typical of AI-generated content, including repetitiveness, consistency in tone and style, presence of AI generation artifacts, and overuse of specific phrases. While it maintains a logical flow and demonstrates some contextual understanding, the overall style and structure suggest it was likely generated by an AI.”

News Article Analysis – Medium

“The text has a moderate likelihood of being AI-generated due to the mix of formal structure and complex argumentation. While it has some traits of AI authorship, the depth and coherence suggest significant human involvement.”

Personal Blog Post Analysis – Low

“The text displays several characteristics typical of human writing, suggesting a low likelihood of being AI-generated. Overall, the personal anecdotes, the detailed recounting of interactions, and the emotional depth indicate that the text is likely written by a human.”

Personal Blog Post Analysis – Medium

“The text contains informative content and maintains a consistent style, the repetitiveness, overuse of specific phrases, presence of AI artifacts, and lack of deep emotional and contextual understanding suggest a medium likelihood of being AI-generated. The balance of coherence and simplicity, along with the generic nature of the insights, further supports this assessment.”

My Review of the Results

I was not surprised that the GPT scored my personal blog post with a low likelihood of being AI-generated. I purposely chose the sample of my writing because I knew it did not contain any AI-generated text. The analysis scored it low because of the personal anecdotes, the detailed recounting of interactions and the emotional depth of the text.

I also asked the GPT to score one of my more recent blog posts, which I started from a ChatGPT-generated first draft and then heavily revised and edited before publishing. The analysis still suggested a medium likelihood that it was AI-generated, which is correct given how the post began.

I was curious about the characterization of the presence of AI generation artifacts in the second personal blog post, so I asked for more details. The GPT returned a lengthy answer with sections covering unnatural phrasing, abrupt transitions, over-explanations, generic and vague statements, inconsistent details, and repetitive sentence structures. The detail was pretty specific, even quoting examples from the text to support its findings.

I wasn’t really prepared for the harsh but accurate criticism of the AI artifacts.

Try It Yourself

If you’re interested in trying the “AI-thenticate and Validate” analyzer out yourself, you’ll find it here:

https://chatgpt.com/g/g-Kz2wUemlM-ai-thenticate-and-validate

You’ll need to sign in to your OpenAI account to use it.

Thoughts

Why should we care if content is computer generated or written by a person?

Well, to start, there has been a lot of concern in the last two years about students cheating by using chatbot-generated content for homework, papers, and whatnot. I reckon that the argument isn’t any different today than it was decades ago when electronic calculators were introduced. I suspect the same argument was proffered when slide rules became common in the mid-1950s.

As a total aside, I have my dad’s slide rule (a vintage relic) and I think I have a Bowmar electronic calculator from the mid-1970s (another relic of the past). While the electronic calculator is a more recent invention, slide rules, unbeknownst to me, have been around since the 17th century (I looked it up).

Notwithstanding the ready availability of calculators, we still need to learn essential arithmetic, maybe not calculus or geometry, but it wouldn’t hurt. The same goes for essential writing: we need to teach and learn how to write. Of greater importance, we need to teach and learn how to think critically. I have often said that the most important skill I learned in college was how to think about things. This has always been my superpower, along with astute powers of observation.

I’d like to reframe the argument for or against AI-generated content: When is it okay, and when is it not okay, to use a tool?

We don’t give much thought to whether or not it’s okay to use a calculator to add, subtract, multiply or divide. It’s gotten more difficult for me personally to do those calculations in my head. When calculating the tip to leave with the dinner bill, I’m quick to take out my smartphone and use the built-in calculator to compute an 18% tip. Skills like math need regular use if we want to stay limber enough for anything more complex than adding two numbers together.

If we think about ChatGPT as just another tool that content creators can use to produce something, is it really any different than any other tool that they might use? What about word processors or spell checkers? I think it boils down to the five Ws and one H: who, what, when, where, why, and how the tool is being used, no matter whether it is ChatGPT or a calculator app on a smartphone.

Postscript

“While the text exhibits some traits that could be indicative of AI generation, such as abrupt transitions and certain repeated phrases, the overall complexity, coherence, and emotional depth suggest significant human involvement. Therefore, the likelihood of this text being AI-generated is medium.”

#AIDetection #ContentAuthenticity #Watermarking #ChatGPT #DigitalContent

About the Author

Stephen Howell is a multifaceted expert with a wealth of experience in technology, business management, and development. He is the innovative mind behind the cutting-edge AI powered Kognetiks Chatbot for WordPress plugin. Utilizing the robust capabilities of OpenAI’s API, this conversational chatbot can dramatically enhance your website’s user engagement. Visit Kognetiks Chatbot for WordPress to explore how to elevate your visitors’ experience, and stay connected with his latest advancements and offerings in the WordPress community.
